AI doesn’t know how to say “I don’t know”

AI search tools are wrong 60% of the time*.

Have a noodle on that.

If a search engine returned nonsense 6 times out of 10, we’d stop using it.

But with AI? We seem strangely willing to forgive it.

Maybe because it sounds confident.

Maybe because we don’t yet know what “good” looks like.

Or maybe we think we’re not prompting it correctly.

As a designer, I keep returning to one core idea:

We haven’t truly designed AI experiences.

We’ve just wrapped user interfaces around research prototypes (e.g., Google’s NotebookLM).

Take the standard AI chat format:

A prompt box. A reply. A reset.

No relationship. No growth.

It’s not an experience. It’s a rinse-and-repeat transaction.

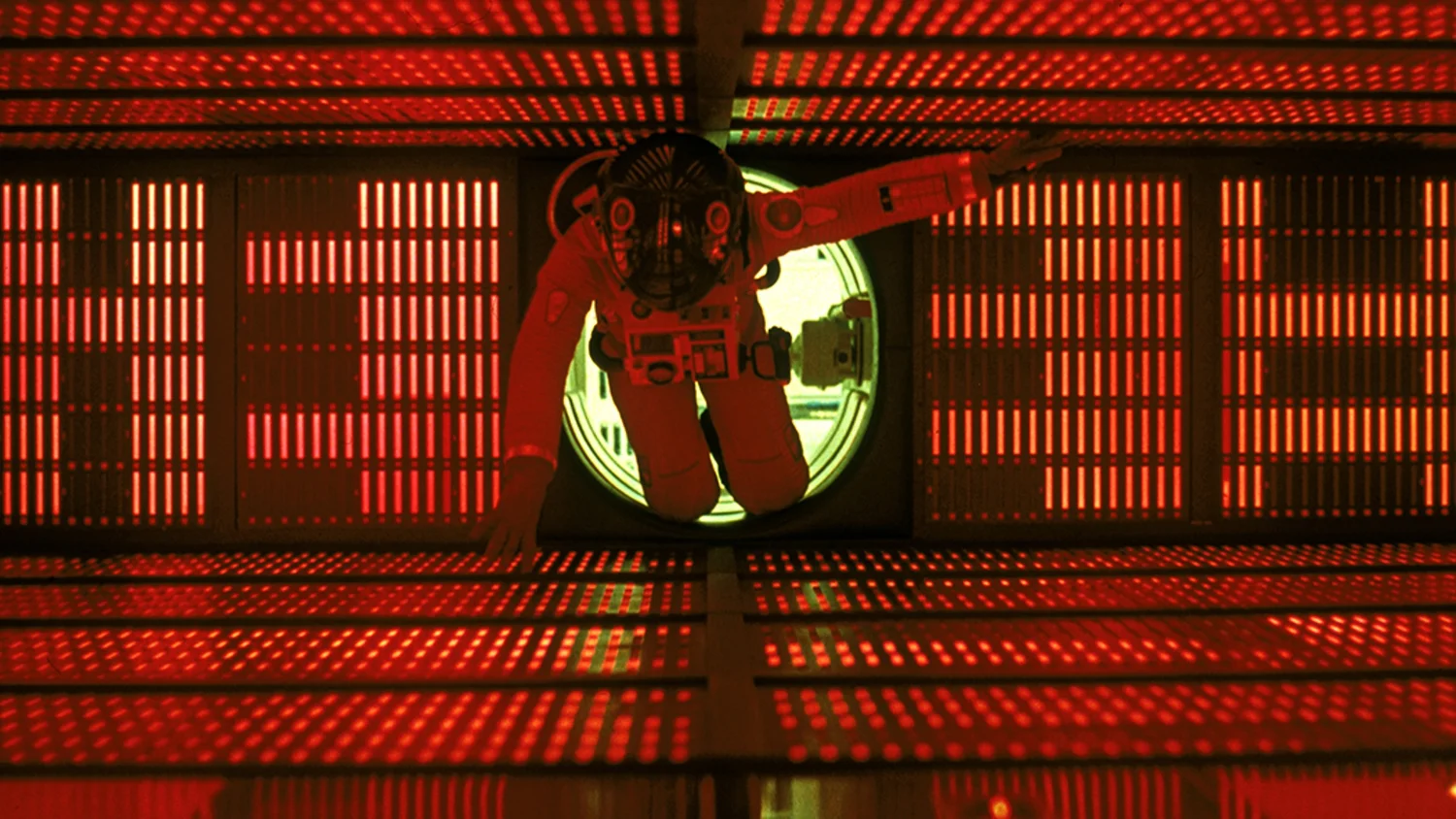

Whenever I see an AI confidently respond with made-up answers, I can’t help but think of HAL 9000 in 2001: A Space Odyssey—the moment it says:

“I’m sorry, Dave. I’m afraid I can’t do that.”

And Dave’s look of horror. It’s chilling.

HAL doesn’t explain. It doesn’t clarify. It just decides.

And that’s where we are with many AI tools today.

That’s not just a technical problem.

It’s a design flaw.

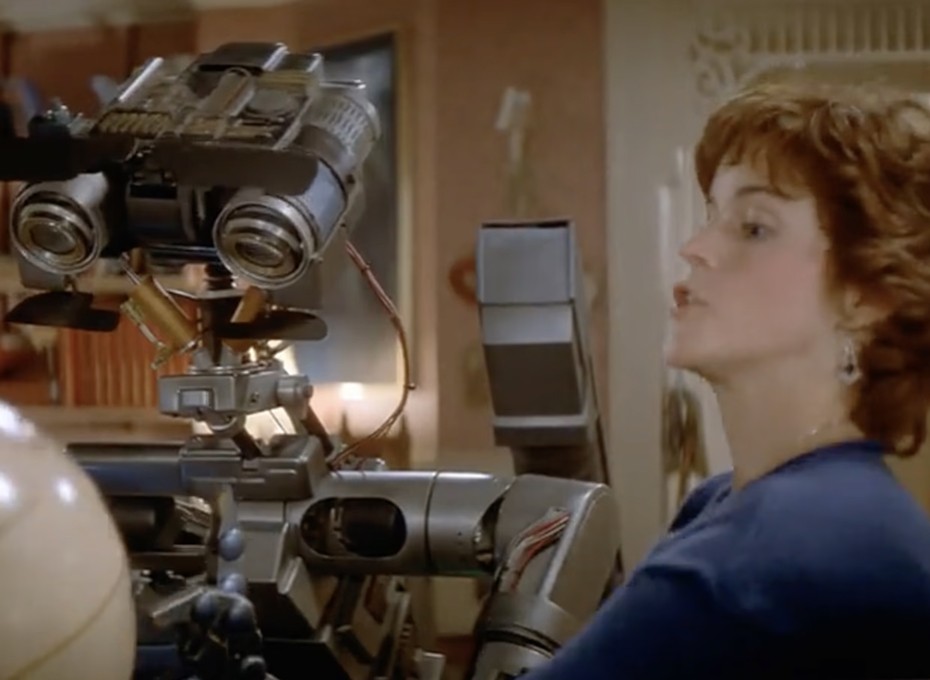

Most AI tools today behave like HAL.

They speak with authority but make up facts, skip over nuance, and never admit what they don’t know.

Some tools are beginning to break the pattern.

1. ChatGPT and Microsoft’s Copilot can work with predefined applications, giving users more context and a shared source of truth.

2. Perplexity AI and others have started to show source links and confidence levels to indicate uncertainty—an important step toward transparency.

3. Meanwhile, good old form validation and error states still do more to build trust than most AI tools today.

The issue isn’t model accuracy; it’s the absence of human-computer interaction design.

Imagine an AI that said:

“I’m not sure about that. Would you like me to check another source?”

or

“Can you clarify what you mean by ‘best’? Are you looking for speed, cost, or reliability?”

Just as we trust people who admit when they’re unsure, we’d trust AI more if it signalled doubt** and asked better questions.

What if AI experiences were built around:

1. Personal data vaults: User-owned, verified collections of facts, preferences, and values that AIs can reference—like a source of truth unique to you.

2. Clarifying conversations: Systems that ask follow-up questions, check scope, and adapt the experience in real time.

3. Confidence signalling: Interfaces that don’t just look polished but communicate how certain (or uncertain) a result is.

As AI moves from lab demo to daily tool, we need to decide:

Ship fast and break trust?

Or design for clarity, humility, and collaboration?

How do we fix this? How are you meeting the challenge of AI adoption for your customers?